Data is a top priority for many in asset-intensive industries. Digital transformation and sustainability initiatives both run on data availability.

Integration in the cloud supports wide-ranging initiatives from predictive modeling of oil well maintenance to reliability-centered inspections and ESG reporting.

With data open for consumption, teams have the flexibility to develop industrial intelligence for smarter, more efficient operations. However, data isn’t always open for consumption.

There are a few common blockers to making data available in the cloud.

- Data access

- Insufficient data

- Data standardization

- Lack of context

- Data quality

Data Access for Industrial Intelligence

Data scientists and engineers gain a foothold on data when quality, context, standardization, and retrievability are guiding principles for industrial data management.

As at many companies, time-series data at Chevron is first collected in operational technology (OT) and supervisory control and data acquisition (SCADA) systems.

Seeing an opportunity for enterprise-wide monitoring, reporting, and analytics, Chevron’s Time-Series Services needed to provide data scientists and engineers with easily retrievable operational data that could facilitate continuous improvement of data quality, context, and standardization. In addition, the scale of the transformation required Chevron to seek a new approach.

Chevron turned to Fusion to get past these challenges and to put 10 years of historical data and counting into its data lake on Microsoft Azure.

Design Features of On-Premise Systems

There are a few standard approaches to moving data to the cloud, but each has its drawbacks. In general, OT and SCADA systems work well as repositories of data aligned to specific business units. However, they lack the extensibility that cloud-based Big Data initiatives, like Industrial AI/ML, need. The blockers noted above commonly stem from:

- Paywalls: Licensing and user permissions make data sharing in the cloud expensive

- Firewalls: Cybersecurity concerns keep data in on-premise systems

- Data compression: Movement of data to the cloud takes a toll on data quality and the resulting analytics, often due to the loss of metadata

- Wrangling & feature engineering: Data scientists and engineers, instead of prioritizing value-added work like the development of data science models, slog away at manual tasks so data is consistent and complete
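
As one illustration of the manual completeness checks described in the last bullet, the sketch below looks for gaps in a series of timestamps. It is a minimal example under stated assumptions, not part of Fusion or any Chevron system: the function name `find_gaps`, the one-minute sampling interval, and the sample readings are all hypothetical.

```python
from datetime import datetime, timedelta


def find_gaps(timestamps, expected_interval):
    """Return (earlier, later) pairs of consecutive readings spaced wider than expected."""
    ordered = sorted(timestamps)
    return [
        (earlier, later)
        for earlier, later in zip(ordered, ordered[1:])
        if later - earlier > expected_interval
    ]


# Hypothetical readings that should arrive once per minute.
readings = [
    datetime(2023, 1, 1, 0, 0),
    datetime(2023, 1, 1, 0, 1),
    datetime(2023, 1, 1, 0, 5),  # readings for 00:02 through 00:04 are missing
    datetime(2023, 1, 1, 0, 6),
]

gaps = find_gaps(readings, timedelta(minutes=1))
for earlier, later in gaps:
    print(f"Gap between {earlier.isoformat()} and {later.isoformat()}")
```

Checks like this are simple on their own, but repeating them by hand across many tags and systems is the kind of work that pulls time away from model development.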

Moving Industrial Data to a Data Lake

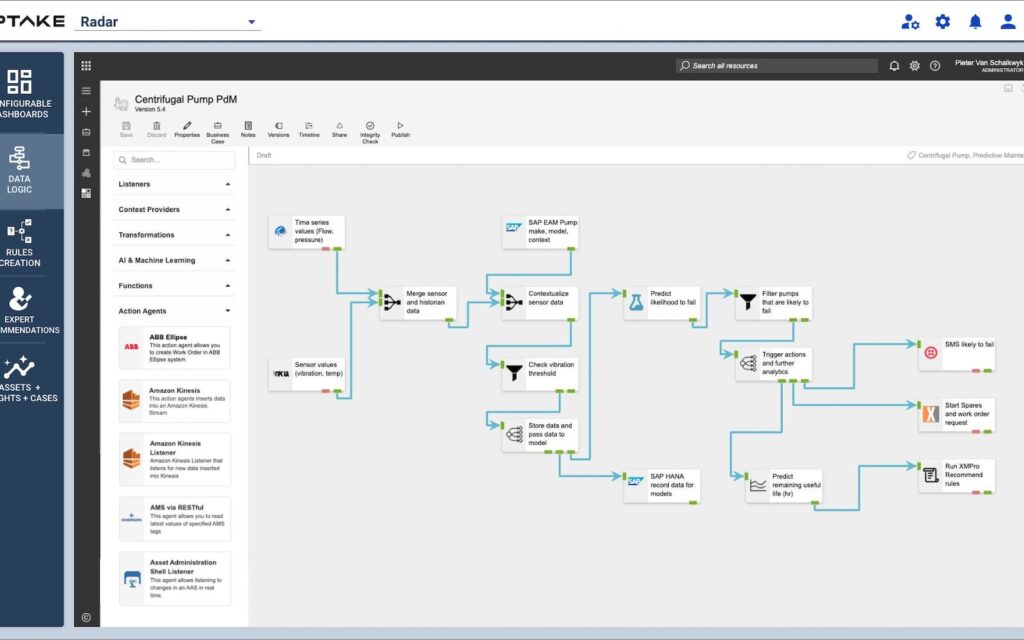

With Fusion, approved consumers leverage up-to-date data to stay on top of operational activity. Built-in tools from Microsoft Azure like Power BI allow data scientists and engineers to easily develop dashboards for monitoring and reporting. Fusion can also feed data into Uptake Radar to configure and visualize analytics, workflows, and dashboards for decision support.

Data consumers are able to take advantage of more sophisticated analytics like Industrial AI/ML and digital twins because Uptake Fusion preserves metadata in an open and secure format when it moves OT data to Microsoft Azure.
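
To make the idea of preserving metadata concrete, the sketch below keeps each reading together with its context in an open, text-based format. It is only an illustration: the `Sample` fields, the tag name, and the choice of JSON Lines are assumptions made for this example and do not describe Fusion's actual storage format or schema.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class Sample:
    """One time-series reading plus the context needed to interpret it."""
    tag: str        # hypothetical sensor tag name
    timestamp: str  # ISO 8601 timestamp in UTC
    value: float
    unit: str       # engineering unit
    quality: str    # hypothetical quality flag, e.g. "good" or "bad"


def to_json_lines(samples):
    """Serialize samples as JSON Lines, one self-describing record per line."""
    return "\n".join(json.dumps(asdict(s), sort_keys=True) for s in samples)


def from_json_lines(text):
    """Rebuild Sample objects from JSON Lines text."""
    return [Sample(**json.loads(line)) for line in text.splitlines() if line]


samples = [
    Sample("WELL-01.PRESSURE", "2023-01-01T00:00:00Z", 101.3, "kPa", "good"),
    Sample("WELL-01.PRESSURE", "2023-01-01T00:01:00Z", 101.9, "kPa", "good"),
]

# The unit and quality flag travel with every value through the round trip.
round_tripped = from_json_lines(to_json_lines(samples))
```

Because each record carries its own unit and quality flag, a downstream consumer does not have to go back to the source system to interpret a value.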

“BY TEAMING WITH UPTAKE AND MICROSOFT TO CONNECT AND ALSO AUTOMATE COMPLEX DATA, WE ARE ABLE TO UNLOCK THE INSIGHTS TO HELP CHEVRON DELIVER ON HIGHER RETURNS AND LOWER CARBON FOR OUR ENERGY FUTURE.”

— ELLEN NIELSEN, CHIEF DATA OFFICER, CHEVRON

Realizing a Lower Carbon Future at Chevron

By deploying Fusion, Chevron leverages its data at scale to develop intelligence that unlocks valuable outcomes for its business and its environmental, social, and corporate governance (ESG) initiatives. Fusion helps Chevron maximize output, forecast production, improve revenue growth, and advance its sustainability initiatives.
